Ordinary differential equation

In mathematics, an ordinary differential equation (or ODE) is a relation that contains functions of only one independent variable, and one or more of their derivatives with respect to that variable.

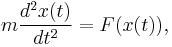

A simple example is Newton's second law of motion, which leads to the differential equation

$$m \frac{d^2 x(t)}{dt^2} = F(x(t))$$

for the motion of a particle of constant mass m. In general, the force F depends upon the position x(t) of the particle at time t, and thus the unknown function x(t) appears on both sides of the differential equation, as is indicated in the notation F(x(t)).

Ordinary differential equations are distinguished from partial differential equations, which involve partial derivatives of functions of several variables.

Ordinary differential equations arise in many different contexts including geometry, mechanics, astronomy and population modelling. Many mathematicians have studied differential equations and contributed to the field, including Newton, Leibniz, the Bernoulli family, Riccati, Clairaut, d'Alembert and Euler.

Much study has been devoted to the solution of ordinary differential equations. In the case where the equation is linear, it can often be solved by analytical methods. Unfortunately, most of the interesting differential equations are non-linear and, with a few exceptions, cannot be solved exactly. Approximate solutions are then obtained numerically (see numerical ordinary differential equations).
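As a rough illustration of what a numerical approximation looks like, the following Python sketch integrates a first-order equation y' = f(x, y) with the classical fourth-order Runge–Kutta scheme. The function name `rk4_solve`, the step count and the logistic test equation are choices made for this example, not something prescribed by the article; the logistic equation is one of the few non-linear cases with a known exact solution, which makes it convenient for checking the result.

```python
import math


def rk4_solve(f, x0, y0, x_end, steps):
    """Integrate y' = f(x, y) from x0 to x_end using classical RK4 steps."""
    h = (x_end - x0) / steps
    x, y = x0, y0
    for _ in range(steps):
        k1 = f(x, y)
        k2 = f(x + h / 2, y + h * k1 / 2)
        k3 = f(x + h / 2, y + h * k2 / 2)
        k4 = f(x + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        x += h
    return y


def logistic_rhs(x, y):
    """Right-hand side of the non-linear logistic equation y' = y (1 - y)."""
    return y * (1.0 - y)


# Initial value y(0) = 0.5; the exact solution is y(x) = 1 / (1 + exp(-x)).
approx = rk4_solve(logistic_rhs, 0.0, 0.5, 2.0, 200)
exact = 1.0 / (1.0 + math.exp(-2.0))
print(approx, exact)
```

For stiff or otherwise difficult problems a fixed-step scheme like this one is usually not adequate, and an adaptive or implicit method would be the more typical choice.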


Definitions

Ordinary differential equation

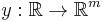

Let y be an unknown function $y : \mathbb{R} \to \mathbb{R}$

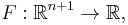

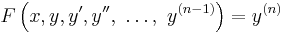

in x with $y^{(n)}$ the nth derivative of y, and let F be a given function $F : \mathbb{R}^{n+1} \to \mathbb{R}$;

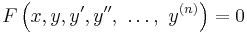

then an equation of the form

$$F(x, y, y', \ldots, y^{(n-1)}) = y^{(n)}$$

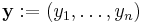

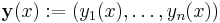

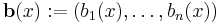

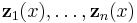

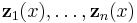

is called an ordinary differential equation (ODE) of order n. If y is an unknown vector valued function $y : \mathbb{R} \to \mathbb{R}^m$, it is called a system of ordinary differential equations of dimension m (in this case, $F : \mathbb{R}^{mn+1} \to \mathbb{R}^m$).

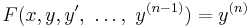

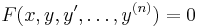

More generally, an implicit ordinary differential equation of order n has the form

$$F(x, y, y', \ldots, y^{(n)}) = 0$$

where $F : \mathbb{R}^{n+2} \to \mathbb{R}$ depends on $y^{(n)}$. To distinguish the above case from this one, an equation of the form

$$F(x, y, y', \ldots, y^{(n-1)}) = y^{(n)}$$

is called an explicit differential equation.

A differential equation not depending on x is called autonomous.

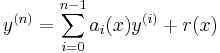

A differential equation is said to be linear if F can be written as a linear combination of the derivatives of y together with a constant term, all possibly depending on x:

$$y^{(n)} = \sum_{i=0}^{n-1} a_i(x)\, y^{(i)} + r(x)$$

with $a_i(x)$ and $r(x)$ continuous functions in x. The function r(x) is called the source term; if r(x) = 0 then the linear differential equation is called homogeneous, otherwise it is called non-homogeneous or inhomogeneous.
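To make the terminology concrete, here are a few sample equations classified according to the definitions of this section. The equations themselves are chosen purely for illustration and are not taken from the article.

```latex
\begin{align*}
y'' + y &= 0
  && \text{linear, homogeneous, autonomous, order 2} \\
y'' + x\,y' &= \sin x
  && \text{linear, non-homogeneous with } r(x) = \sin x, \text{ order 2} \\
y' &= y^{2}
  && \text{explicit, non-linear, autonomous, order 1} \\
(y')^{2} + y^{2} - 1 &= 0
  && \text{implicit, non-linear, autonomous, order 1}
\end{align*}
```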

Solutions

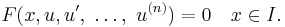

Given a differential equation

$$F(x, y, y', \ldots, y^{(n)}) = 0$$

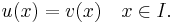

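a function $u : I \subset \mathbb{R} \to \mathbb{R}$ is called the solution or integral curve for F, if u is n-times differentiable on I, and

$$F(x, u, u', \ldots, u^{(n)}) = 0, \quad x \in I.$$

Given two solutions $u : J \subset \mathbb{R} \to \mathbb{R}$ and $v : I \subset \mathbb{R} \to \mathbb{R}$, u is called an extension of v if $I \subset J$ and

$$u(x) = v(x), \quad x \in I.$$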

A solution which has no extension is called a maximal solution. A solution defined on all of R is called a global solution.

A general solution of an nth-order equation is a solution containing n arbitrary independent constants of integration. A particular solution is derived from the general solution by setting the constants to particular values, often chosen to fulfill given initial conditions or boundary conditions. A singular solution is a solution that cannot be derived from the general solution.
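The three notions can be seen side by side in a short worked example. The equations below (a simple growth equation and a Clairaut-type equation) are chosen here for illustration; C denotes the arbitrary constant.

```latex
\begin{align*}
&\text{General solution of } y' = y: && y(x) = C e^{x} \\
&\text{Particular solution with } y(0) = 2: && y(x) = 2 e^{x} \quad (C = 2) \\[4pt]
&\text{General solution of } y = x\,y' + (y')^{2}: && y(x) = C x + C^{2} \\
&\text{Singular solution of the same equation:} && y(x) = -\tfrac{x^{2}}{4}
\end{align*}
% Check of the singular solution: y' = -x/2, so
% x y' + (y')^2 = -x^2/2 + x^2/4 = -x^2/4 = y,
% yet no choice of the constant C in C x + C^2 produces -x^2/4.
```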

Examples

Existence and uniqueness of solutions

There are several theorems that establish existence and uniqueness of solutions to initial value problems involving ODEs both locally and globally. The two main theorems are the Picard–Lindelöf theorem and the Peano existence theorem.
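A standard example, added here for illustration, shows why the two theorems differ. The Peano theorem needs only continuity of the right-hand side and guarantees existence, while the Picard–Lindelöf theorem additionally requires a Lipschitz condition in y and then also guarantees uniqueness. In the initial value problem below the right-hand side is continuous but not Lipschitz near y = 0, and uniqueness fails.

```latex
\begin{align*}
&y' = \sqrt{|y|}, \qquad y(0) = 0, \qquad x \ge 0 \\
&\text{Solution 1:} \quad y(x) = 0 \\
&\text{Solution 2:} \quad y(x) = \tfrac{x^{2}}{4},
  \quad \text{since } y' = \tfrac{x}{2} = \sqrt{\tfrac{x^{2}}{4}} \text{ for } x \ge 0
\end{align*}
```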

Reduction to a first order system

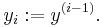

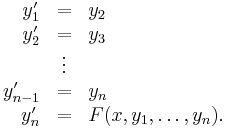

Any differential equation of order n can be written as a system of n first-order differential equations. Given an explicit ordinary differential equation of order n (and dimension 1),

$$F(x, y, y', \ldots, y^{(n-1)}) = y^{(n)},$$

define a new family of unknown functions

$$y_i := y^{(i-1)}$$

for i from 1 to n.

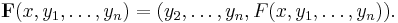

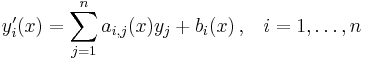

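The original differential equation can be rewritten as the system of differential equations with order 1 and dimension n given by

$$\begin{aligned} y_1' &= y_2 \\ &\;\;\vdots \\ y_{n-1}' &= y_n \\ y_n' &= F(x, y_1, \ldots, y_n) \end{aligned}$$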

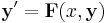

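which can be written concisely in vector notation as

$$\mathbf{y}' = \mathbf{F}(x, \mathbf{y})$$

with

$$\mathbf{y} := (y_1, \ldots, y_n)$$

and

$$\mathbf{F}(x, y_1, \ldots, y_n) := (y_2, \ldots, y_n, F(x, y_1, \ldots, y_n)).$$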
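The reduction is also how higher-order equations are usually handed to a numerical integrator. The Python sketch below builds the first-order vector field (y_2, ..., y_n, F(x, y_1, ..., y_n)) from a scalar function F and integrates it with a fixed-step Runge–Kutta scheme. The helper names `reduce_to_first_order` and `rk4_system`, and the test equation y'' = -y, are assumptions made for this example.

```python
import math


def reduce_to_first_order(F, n):
    """Turn y^(n) = F(x, y, y', ..., y^(n-1)) into a first-order vector field.

    The state is the list [y_1, ..., y_n] with y_i = y^(i-1).
    """
    def field(x, ys):
        if len(ys) != n:
            raise ValueError("state must have exactly n components")
        # y_1' = y_2, ..., y_{n-1}' = y_n, y_n' = F(x, y_1, ..., y_n)
        return list(ys[1:]) + [F(x, *ys)]
    return field


def rk4_system(field, x0, ys0, x_end, steps):
    """Integrate the system ys' = field(x, ys) with classical RK4 steps."""
    h = (x_end - x0) / steps
    x, ys = x0, list(ys0)
    for _ in range(steps):
        k1 = field(x, ys)
        k2 = field(x + h / 2, [y + h / 2 * k for y, k in zip(ys, k1)])
        k3 = field(x + h / 2, [y + h / 2 * k for y, k in zip(ys, k2)])
        k4 = field(x + h, [y + h * k for y, k in zip(ys, k3)])
        ys = [y + h * (a + 2 * b + 2 * c + d) / 6
              for y, a, b, c, d in zip(ys, k1, k2, k3, k4)]
        x += h
    return ys


# Second-order example: y'' = -y with y(0) = 1, y'(0) = 0, so y(x) = cos(x).
oscillator = reduce_to_first_order(lambda x, y, dy: -y, 2)
y_pi, dy_pi = rk4_system(oscillator, 0.0, [1.0, 0.0], math.pi, 1000)
print(y_pi, dy_pi)
```

The first component of the state approximates y and the second approximates y', so at x = π the result should be close to cos(π) and -sin(π).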

Linear ordinary differential equations

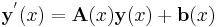

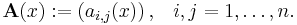

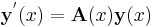

A well-understood class of differential equations is that of linear differential equations. We can always reduce an explicit linear differential equation of any order to a system of differential equations of order 1

$$y_i'(x) = \sum_{j=1}^{n} a_{i,j}(x)\, y_j(x) + b_i(x), \quad i = 1, \ldots, n$$

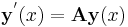

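which we can write concisely using matrix and vector notation as

$$y'(x) = A(x)\, y(x) + b(x)$$

with

$$y(x) := (y_1(x), \ldots, y_n(x)), \quad b(x) := (b_1(x), \ldots, b_n(x)), \quad A(x) := (a_{i,j}(x)), \; i, j = 1, \ldots, n.$$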

Homogeneous equations

The set of solutions for a system of homogeneous linear differential equations of order 1 and dimension n

$$y'(x) = A(x)\, y(x)$$

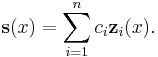

forms an n-dimensional vector space. Given a basis for this vector space $z_1(x), \ldots, z_n(x)$, which is called a fundamental system, every solution $s(x)$ can be written as

$$s(x) = \sum_{i=1}^{n} c_i\, z_i(x).$$

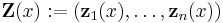

The n × n matrix whose columns are the functions of a fundamental system,

$$Z(x) := (z_1(x), \ldots, z_n(x)),$$

is called a fundamental matrix. In general there is no method to explicitly construct a fundamental system, but if one solution is known d'Alembert reduction can be used to reduce the dimension of the differential equation by one.
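A small worked example may help here. The constant matrix A below is chosen for illustration (it corresponds to y'' = -y written as a first-order system), and the symbols z_1, z_2, Z, c_1, c_2 are notation assumed for a fundamental system, its matrix and the coefficients.

```latex
\begin{align*}
y'(x) &= A\,y(x), \qquad
A = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix} \\
z_1(x) &= \begin{pmatrix} \cos x \\ -\sin x \end{pmatrix}, \qquad
z_2(x) = \begin{pmatrix} \sin x \\ \cos x \end{pmatrix} \\
Z(x) &= \begin{pmatrix} \cos x & \sin x \\ -\sin x & \cos x \end{pmatrix},
\qquad \det Z(x) = 1 \neq 0 \\
s(x) &= c_1 z_1(x) + c_2 z_2(x)
\end{align*}
% Check: z_1' = (-\sin x, -\cos x)^T = A z_1 and z_2' = (\cos x, -\sin x)^T = A z_2.
```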

Nonhomogeneous equations

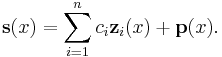

The set of solutions for a system of inhomogeneous linear differential equations of order 1 and dimension n

$$y'(x) = A(x)\, y(x) + b(x)$$

can be constructed by finding the fundamental system $z_1(x), \ldots, z_n(x)$ of the corresponding homogeneous equation and one particular solution $p(x)$ of the inhomogeneous equation. Every solution $s(x)$ of the nonhomogeneous equation can then be written as

$$s(x) = \sum_{i=1}^{n} c_i\, z_i(x) + p(x).$$

A particular solution to the nonhomogeneous equation can be found by the method of undetermined coefficients or the method of variation of parameters.
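As a minimal illustration of the method of undetermined coefficients, consider the scalar case (n = 1) below. The equation and the polynomial ansatz are chosen for this example; p denotes the particular solution and c the free constant of the homogeneous part.

```latex
\begin{align*}
&y' = -y + x
  && \text{(homogeneous part } y' = -y \text{ has fundamental system } e^{-x}\text{)} \\
&\text{Ansatz: } p(x) = \alpha x + \beta
  && \Rightarrow\ \alpha = -(\alpha x + \beta) + x \\
&\text{Comparing coefficients: } 1 - \alpha = 0,\ \ \alpha = -\beta
  && \Rightarrow\ \alpha = 1,\ \beta = -1 \\
&p(x) = x - 1, \qquad y(x) = c\,e^{-x} + x - 1
\end{align*}
```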

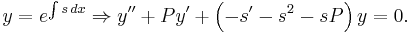

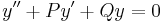

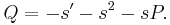

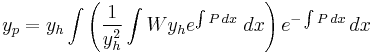

Concerning second order linear ordinary differential equations, it is well known that

So, if  is a solution of:  , then  such that:

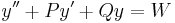

If $y_h$ is a solution of the homogeneous equation $y'' + P y' + Q y = 0$, then a particular solution $y_p$ of $y'' + P y' + Q y = W$ is given by [1]:

$$y_p = y_h \int \frac{\int y_h\, W\, e^{\int P\,dx}\,dx}{y_h^{2}\, e^{\int P\,dx}}\,dx.$$
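A formula of this kind follows from reduction of order and can be checked on a simple case. In the worked example below, the symbols P, Q, W, y_h and y_p are notation assumed here for the equation y'' + P y' + Q y = W, a known solution of its homogeneous counterpart, and the resulting particular solution; the equation itself is chosen for illustration and all constants of integration are set to zero.

```latex
\begin{align*}
&y'' - y = 1: \qquad P = 0,\quad Q = -1,\quad W = 1,\quad y_h = e^{x} \\
y_p &= y_h \int \frac{\int y_h\, W\, e^{\int P\,dx}\,dx}{y_h^{2}\, e^{\int P\,dx}}\,dx
     = e^{x} \int \frac{\int e^{x}\,dx}{e^{2x}}\,dx
     = e^{x} \int e^{-x}\,dx
     = e^{x}\,(-e^{-x}) = -1 \\
&\text{Check: } y_p'' - y_p = 0 - (-1) = 1
\end{align*}
```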

Fundamental systems for homogeneous equations with constant coefficients

If a system of homogeneous linear differential equations has constant coefficients

$$y'(x) = A\, y(x)$$

then we can explicitly construct a fundamental system. The fundamental system can be written as a matrix differential equation

$$Y' = A\,Y$$

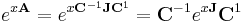

with solution as a matrix exponential

$$e^{xA}$$

which is a fundamental matrix for the original differential equation. To explicitly calculate this expression we first transform A into Jordan normal form

$$T A T^{-1} = J$$

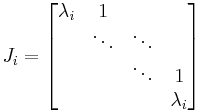

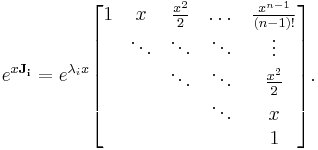

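and then evaluate the Jordan blocks

$$J_i = \begin{bmatrix} \lambda_i & 1 & & \\ & \lambda_i & \ddots & \\ & & \ddots & 1 \\ & & & \lambda_i \end{bmatrix}$$

of J separately as

$$e^{x J_i} = e^{\lambda_i x} \begin{bmatrix} 1 & x & \frac{x^{2}}{2} & \cdots & \frac{x^{k-1}}{(k-1)!} \\ & 1 & x & \cdots & \frac{x^{k-2}}{(k-2)!} \\ & & \ddots & \ddots & \vdots \\ & & & 1 & x \\ & & & & 1 \end{bmatrix},$$

where k is the size of the block $J_i$.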
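The closed form for the exponential of a Jordan block can be checked numerically. The Python sketch below approximates the matrix exponential by a truncated power series, which is a swapped-in technique used only for verification and not the Jordan-form computation itself. The helper names `mat_mul` and `mat_exp`, the number of series terms and the 2 × 2 test block are assumptions made for this example.

```python
import math


def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_exp(a, x, terms=30):
    """Approximate e^{xA} by the truncated series sum_{m < terms} (xA)^m / m!."""
    n = len(a)
    xa = [[x * a[i][j] for j in range(n)] for i in range(n)]
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]  # current series term, starts at identity
    for m in range(1, terms):
        # term_m = term_{m-1} * (xA) / m
        term = [[v / m for v in row] for row in mat_mul(term, xa)]
        for i in range(n):
            for j in range(n):
                result[i][j] += term[i][j]
    return result


# A 2 x 2 Jordan block with eigenvalue 2; the closed form is
# e^{xJ} = e^{2x} * [[1, x], [0, 1]].
jordan_block = [[2.0, 1.0], [0.0, 2.0]]
x = 0.5
series = mat_exp(jordan_block, x)
closed_form = [[math.exp(2 * x), x * math.exp(2 * x)],
               [0.0, math.exp(2 * x)]]
print(series, closed_form)
```

A truncated series is adequate for small matrices with moderate norm, as here; for large or badly scaled matrices it can lose accuracy, which is one reason the Jordan-form route or dedicated library routines are used instead.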

General Case

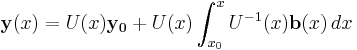

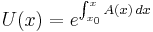

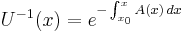

To solve

$$y'(x) = A(x)\,y(x) + b(x) \quad \text{with } y(x_0) = y_0$$

(here y(x) is a vector or matrix, and A(x) is a matrix), let U(x) be the solution of y'(x) = A(x)y(x) with U(x0) = I (the identity matrix). After substituting y(x) = U(x)z(x), the equation y'(x) = A(x)y(x)+b(x) simplifies to U(x)z'(x) = b(x). Thus,

$$y(x) = U(x)\, y_0 + U(x) \int_{x_0}^{x} U^{-1}(t)\, b(t)\, dt.$$

If A(x1) commutes with A(x2) for all x1 and x2, then

$$U(x) = e^{\int_{x_0}^{x} A(t)\,dt}$$

(and thus $U^{-1}(x) = e^{-\int_{x_0}^{x} A(t)\,dt}$), but in the general case there is no closed form solution, and an approximation method such as Magnus expansion may have to be used.
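In the scalar case the commutation condition holds automatically, so the whole procedure can be carried out by hand. The worked example below uses an equation chosen for illustration, with U, z and x_0 = 0 playing the roles described in this section.

```latex
\begin{align*}
&y' = 2x\,y + x, \qquad y(0) = 1
  && (A(x) = 2x,\ b(x) = x,\ x_0 = 0,\ y_0 = 1) \\
&U(x) = e^{\int_0^x 2t\,dt} = e^{x^{2}}, \qquad U^{-1}(x) = e^{-x^{2}} \\
&y(x) = U(x)\Big(y_0 + \int_0^x U^{-1}(t)\,b(t)\,dt\Big)
      = e^{x^{2}}\Big(1 + \int_0^x t\,e^{-t^{2}}\,dt\Big) \\
&\phantom{y(x)} = e^{x^{2}}\Big(1 + \tfrac{1}{2}\big(1 - e^{-x^{2}}\big)\Big)
      = \tfrac{3}{2}\,e^{x^{2}} - \tfrac{1}{2} \\
&\text{Check: } y' = 3x\,e^{x^{2}} = 2x\big(\tfrac{3}{2}e^{x^{2}} - \tfrac{1}{2}\big) + x,
  \qquad y(0) = 1
\end{align*}
```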

Theories of ODEs

Singular solutions

The theory of singular solutions of ordinary and partial differential equations was a subject of research from the time of Leibniz, but only since the middle of the nineteenth century did it receive special attention. A valuable but little-known work on the subject is that of Houtain (1854). Darboux (starting in 1873) was a leader in the theory, and in the geometric interpretation of these solutions he opened a field which was worked by various writers, notably Casorati and Cayley. To the latter is due (1872) the theory of singular solutions of differential equations of the first order as accepted circa 1900.

Reduction to quadratures

The primitive attempt in dealing with differential equations had in view a reduction to quadratures. As it had been the hope of eighteenth-century algebraists to find a method for solving the general equation of the nth degree, so it was the hope of analysts to find a general method for integrating any differential equation. Gauss (1799) showed, however, that the differential equation meets its limitations very soon unless complex numbers are introduced. Hence analysts began to substitute the study of functions, thus opening a new and fertile field. Cauchy was the first to appreciate the importance of this view. Thereafter the real question was to be, not whether a solution is possible by means of known functions or their integrals, but whether a given differential equation suffices for the definition of a function of the independent variable or variables, and if so, what are the characteristic properties of this function.

Fuchsian theory

Two memoirs by Fuchs (Crelle, 1866, 1868), inspired a novel approach, subsequently elaborated by Thomé and Frobenius. Collet was a prominent contributor beginning in 1869, although his method for integrating a non-linear system was communicated to Bertrand in 1868. Clebsch (1873) attacked the theory along lines parallel to those followed in his theory of Abelian integrals. As the latter can be classified according to the properties of the fundamental curve which remains unchanged under a rational transformation, so Clebsch proposed to classify the transcendent functions defined by the differential equations according to the invariant properties of the corresponding surfaces f = 0 under rational one-to-one transformations.

Lie's theory

From 1870 Sophus Lie's work put the theory of differential equations on a more satisfactory foundation. He showed that the integration theories of the older mathematicians can, by the introduction of what are now called Lie groups, be referred to a common source; and that ordinary differential equations which admit the same infinitesimal transformations present comparable difficulties of integration. He also emphasized the subject of transformations of contact.

A general approach to solving differential equations uses their symmetry properties, namely the continuous infinitesimal transformations that map solutions to solutions (Lie theory). Continuous group theory, Lie algebras and differential geometry are used to understand the structure of linear and nonlinear (partial) differential equations, to generate integrable equations, to find their Lax pairs, recursion operators and Bäcklund transforms, and finally to find exact analytic solutions.

Symmetry methods are recognized as a way to study differential equations arising in mathematics, physics, engineering, and many other disciplines.

Sturm–Liouville theory

Sturm–Liouville theory is a theory of eigenvalues and eigenfunctions of linear operators defined in terms of second-order homogeneous linear equations, and is useful in the analysis of certain partial differential equations.

Software for ODE solving

- FuncDesigner (free license: BSD, uses Automatic differentiation, also can be used online via Sage-server)

- VisSim - a visual language for differential equation solving

- Mathematical Assistant on Web: online solving of first order (linear and with separated variables) and second order linear differential equations (with constant coefficients), including intermediate steps in the solution.

- DotNumerics: Ordinary Differential Equations for C# and VB.NET. Initial-value problem for nonstiff and stiff ordinary differential equations (explicit Runge-Kutta, implicit Runge-Kutta, Gear’s BDF and Adams-Moulton).

- Online experiments with JSXGraph

- Maxima computer algebra system (GPL)

- COPASI a free (Artistic License 2.0) software package for the integration and analysis of ODEs.

See also

- Numerical ordinary differential equations

- Difference equation

- Matrix differential equation

- Laplace transform applied to differential equations

- Boundary value problem

- List of dynamical systems and differential equations topics

- Separation of variables

- Method of undetermined coefficients

References

- [1] Polyanin, Andrei D.; Zaitsev, Valentin F. (2003). Handbook of Exact Solutions for Ordinary Differential Equations (2nd ed.). Chapman & Hall/CRC. ISBN 1-58488-297-2.

Bibliography

- Coddington, Earl A.; Levinson, Norman (1955). Theory of Ordinary Differential Equations. New York: McGraw-Hill.

- Hartman, Philip, Ordinary Differential Equations, 2nd Ed., Society for Industrial & Applied Math, 2002. ISBN 0-89871-510-5.

- W. Johnson, A Treatise on Ordinary and Partial Differential Equations, John Wiley and Sons, 1913, in University of Michigan Historical Math Collection

- A. D. Polyanin and V. F. Zaitsev, Handbook of Exact Solutions for Ordinary Differential Equations (2nd edition), Chapman & Hall/CRC Press, Boca Raton, 2003. ISBN 1-58488-297-2

- E.L. Ince, Ordinary Differential Equations, Dover Publications, 1958, ISBN 0486603490

- Witold Hurewicz, Lectures on Ordinary Differential Equations, Dover Publications, ISBN 0-486-49510-8

- Ibragimov, Nail H (1993). CRC Handbook of Lie Group Analysis of Differential Equations Vol. 1-3. Providence: CRC-Press. ISBN 0849344883.

- Teschl, Gerald. Ordinary Differential Equations and Dynamical Systems. Providence: American Mathematical Society. http://www.mat.univie.ac.at/~gerald/ftp/book-ode/.

- A. D. Polyanin, V. F. Zaitsev, and A. Moussiaux, Handbook of First Order Partial Differential Equations, Taylor & Francis, London, 2002. ISBN 0-415-27267-X

- D. Zwillinger, Handbook of Differential Equations (3rd edition), Academic Press, Boston, 1997.

External links

- Differential Equations at the Open Directory Project (includes a list of software for solving differential equations).

- EqWorld: The World of Mathematical Equations, containing a list of ordinary differential equations with their solutions.

- Online Notes / Differential Equations by Paul Dawkins, Lamar University.

- Differential Equations, S.O.S. Mathematics.

- A primer on analytical solution of differential equations from the Holistic Numerical Methods Institute, University of South Florida.

- Ordinary Differential Equations and Dynamical Systems lecture notes by Gerald Teschl.

- Notes on Diffy Qs: Differential Equations for Engineers, an introductory textbook on differential equations by Jiri Lebl of UIUC.